How FDA, Industry, And Academia Are Guiding AI Development In The Life Sciences

Continuously learning systems (CLS) have shown great promise for improving product quality in the pharmaceutical and medical device industries. These artificial intelligence (AI) algorithms constantly and automatically update themselves as they recognize patterns and behaviors from real-world data, enabling companies to become predictive, rather than reactive, when it comes to quality assurance. However, the output for the same task can change as a CLS algorithm evolves. This stands in sharp contrast to systems traditionally used in the life sciences, which are validated and expected to not change — performing exactly the same way each time they are used.

A team of FDA officials and industry professionals working through Xavier Health’s Artificial Intelligence (AI) Initiative are tackling this issue by developing good machine learning practices (GmLPs) for the evaluation and use of CLS. A primary objective of the Xavier Health CLS Working Team has been to identify how one can provide a reasonable level of confidence in the performance of a CLS in a way that maximizes the advantages of AI while minimizing risks to product quality and patient safety. (A second Xavier Health team of industry professionals is actively exploring the use of AI for continuous product quality assurance (CPQA), as discussed in a previous article.)

The team includes members from pharmaceutical, medical device, and computer technology companies, and from academia and FDA. FDA involvement is critical in this effort, as both industry and regulatory agencies need to trust the science behind the AI and evolve their understanding of AI together.

At the Xavier Health AI Summit in August 2018, members of the CLS team discussed the recently completed primary deliverable from the first phase of their work — a white paper, “Perspectives and Good Practices for AI and Continuously Learning Systems in Healthcare.”

Regarding the AI effort, Xavier Health Director Marla Phillips commented, “Some people feel that the use of AI is irresponsible. But honestly, we are at the point in our industry, with decades of data sitting on the shelf unused, that we are irresponsible for not using AI. Think about all the predictive trends sitting on the shelf that could prevent failures. Think of the diagnoses that go undiagnosed, or the cures left unused. It is time to take action.”

Learning Systems Provide Opportunities And Challenges

One of the two team leaders for the CLS Phase 1 effort has been FDA Center for Devices and Radiological Health (CDRH) Senior Biomedical Research Scientist Berkman Sahiner. Sahiner kicked off the CLS team presentation by providing some background on the team’s work, including opportunities and risks with AI systems, and questions the team endeavored to answer as it examined the use of systems that continuously learn.

“To be truly intelligent, an AI system cannot be frozen in time,” he commented. “It has to continuously change and mold into the future.”

There are “a lot of opportunities” with continuously learning systems, Sahiner said. “They can use an ever enlarging data set for algorithm training and improvement. They can adapt to the environment. So if the environment is changing, the continuously learning algorithm can track the environment and can even adapt to individual patients. It might work differently for different patients depending on their needs.”

On the other hand, there are also some potential risks — for example, there might be unintended and undetected degradation of performance over time. This could be due to data quality, inadequate testing, or statistical variations.

Another risk is the potential for “a lack of human involvement in learning and/or in updates,” Sahiner said. And there may be “incompatibility of results with other software that uses the output of the evolving algorithm.”

Sahiner presented a list of questions the team has been focusing on, including the following:

- If I were a potential user in the medical field, what types of tests or design processes would provide me reasonable assurance to accept and use a CLS?

- How do we ensure that the updates due to continuous learning do not compromise the safety and effectiveness of the associated device?

- How do we control the quality of new data?

- How do users adapt to an evolving algorithm?

- When and under what conditions should an algorithm update occur?

- Can and should performance testing be conducted after each algorithm modification?

Regarding the last question, Sahiner said that devices including AI are normally tested once they are designed, and that gives the specifications for performance. “But now these devices seem to be in a constant design phase. How often or when should performance testing be re-done?”

What Is Unique About CLS?

Sahiner’s co-leader for the Phase 1 CLS Team, Abbott Laboratories Quality Head for Informatics and Analytics Mohammed Wahab, explained that a primary goal of the team was to focus on what is unique about continuously learning systems.

“The predominant topic that is out there is about artificial intelligence,” he said. “There is a lot of confusion about what it is and what it isn’t. There is a new article or a new paper out about it every day, if not more frequently.”

One of the team’s purposes, Wahab said, “was to take it one step further to look at what the aspects of continuous learning are that can inform us about how we need to develop our systems and solutions and can pave the path forward. That was a key part that was somewhat hard for us as we embarked on this journey.”

One approach was to look at specific use cases and analyze them as applied to the product lifecycle. “This was not about looking at the lifecycle and looking for the common attributes that we need to address. Instead, it was about identifying the unique attributes of CLS that we need to address within the lifecycle.”

The list of considerations “formed the core of our document. What we discovered was that many of these considerations apply across the lifecycle, so we honed in on those that were unique for particular stages of the lifecycle. You will find both in the white paper.”

Written With Standard Development In Mind

Philips Regulatory Head of Global Software Standards Pat Baird explained that the primary deliverable for the CLS team was a white paper covering best practices for a CLS and how they can be adapted into healthcare. Baird was a co-chair of the CLS medical device sub team from the Phase 1 work and one of the primary authors of the white paper. He is now serving as a co-leader of the entire Phase 2 CLS team.

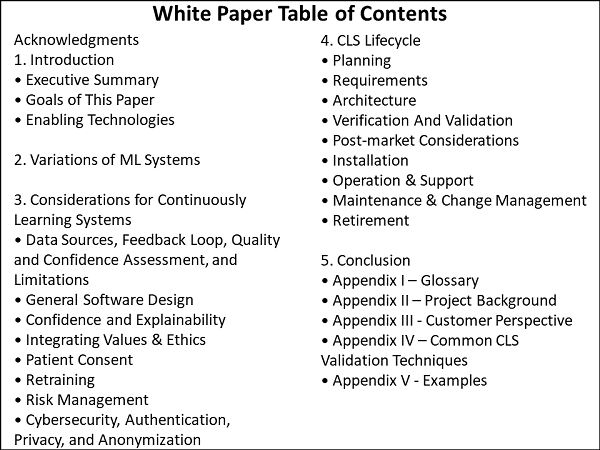

Baird explained that the white paper assumes the reader understands how to write software and requirements, so its focus is on aspects of CLS that differ from other systems. He presented a slide depicting the table of contents of the white paper (see below). He pointed out that the body of the paper is was intentionally kept concise and is only 18 pages long, with the remainder comprised of appendices. “Please take 20 minutes and read it,” he asked.

Baird explained that while the white paper is not intended as a standard, the team anticipated a standard would be written in the future. “When it came to lifecycle planning, I just copied the lifecycle stages from the IEC 62304 medical device software standard and put them in here and asked what was different for CLS during each of these stages.”

In some of the lifecycle stages, the team did not find any differences between IEC 62304 and CLS, so those stages were removed. This was done to “lay the groundwork for future standards work.” The appendices take a “much deeper dive into validation of CLS systems and provide a number of examples.”

Changing Practices And Software Versions Spark Questions

Baird discussed the challenges with healthcare practices that change over time. “How will that impact your data?” he asked. “Is there a driving force behind your data that was perfectly fine when you first had that data and were doing the curation, but that might change a few years later?”

One example would be information in public papers that gets updated, changed, or even rescinded. It is important to consider how those situations would be handled if the information was used in a CLS.

Recalls or performance drifting may require performing a rollback to a previous version of a CLS. “But if there is a rollback on an existing patient, what do you do?” Baird asked. “If you are diagnosed with one version and your progress is being tracked with a different version, does that introduce some things that should be considered? Do you need start trending rollbacks? Is that a signal that a deeper investigation is needed? Or, if we have a new version that is significantly better than an old version, what do we do with existing patients? Should they be reevaluated? How do we handle those kinds of things?”

Software version control is an issue for all manufacturers. However, Baird maintained, CLS systems could have multiple versions to track. As updates are pushed out, some will chose not to take the update, while others will update every time a new version is released.

“How is that going to impact complaint trending?” he asked. “How do we deal with getting real-time data back when a lot of our systems are not organized that way — or at least not yet?”

He also discussed the possibility of more continuous monitoring of the performance and questioned whether it would make sense to consider the concept of a continuous benefit/risk analysis that evaluates all the updates and the performance.

“Since it is always being refined, in a way it is always in the design stage,” Baird said. “Of course there is verification and validation involved with it, but in a way it is always in the design stage if it keeps updating itself.”

Does AI Require Novel Regulatory Approaches?

At the beginning of the project, Baird said, he expected that new regulatory approaches would be needed for CLS. “Because, after all, AI is magic. It is a black box that might have a couple of wormholes into it. There is a lot of mystery around this.”

He pointed out that cGMP 820 for medical devices was last updated in 1996, and the first cGMPs date from 1976, when the Medical Device Regulation Act was passed. “So, we might need something different from regulation” to keep up with the rate of change and innovation in AI.

Comparing the challenges of regulating AI systems to regulating a manufacturing plant, however, he found parallels that may indicate a vastly different approach is not needed.

While data quality is important, it is not new. In manufacturing, the quality of the raw materials impact the quality of the finished product. That quality is controlled by supplier agreements and incoming inspection processes.

In addition, while CLS performance changes over time and needs to be tracked, this is no different than checking product performance against a known standard. It is also synonymous with checking lab equipment against standards and recalibrating when needed.

“Maybe we have a ‘golden’ set of data that we use, and as things are evolving over time we periodically or event-based check against this gold set of data to see if the software is performing within the specification,” Baird suggested. “This is already a paradigm that we know and are using in a different part of the factory. So maybe things aren’t as new and scary as I thought they would be.”

Explainability And Trust Must Be Addressed

Abbott’s Wahab address the importance of users trusting the AI, noting that, “If no one follows the advice of what the software says, if people do not believe the software, we are not making a positive impact. Explainability — the notion of transparency, the notion of building confidence — is extremely critical beyond just the technology in making these solutions effective, particularly in healthcare.”

User bias and negative perception can cause a user not to trust the software. For example, a user that has had poor success with a previous version of a CLS may be highly skeptical of future releases, regardless of any objective evidence the developer may have about improvements to the product.

There is additional pressure on CLS from evolving governmental requirements. One example is the EU General Data Protection Regulation (GDPR) that “defines strong legal stipulations about what the EU defines as transparency and the rights of individuals to ask for traceability in terms of how a particular decision or recommendation was achieved thorough software,” Wahab said.

“We know that that journey is not always possible in some of the advanced AI technologies that we are talking about. So we have a challenge, and perhaps an opportunity, too, to get to a point of helping us and the folks around us, and the industry at large, to define the mechanisms that we need to put into our software development systems to actually build and get to, not a perfect transparency orientation, but what is good enough. That is a large part of what we hope to get to in the next phase of this exercise.”

Vanderbilt University School of Medicine Anesthesiology, Surgery, Biomedical Informatics and Health Policy Professor Jesse Ehrenfeld — who is currently Secretary of the Board of Trustees of the American Medical Association — commented on what physicians and surgeons need in order to use, trust and rely upon AI outcomes. They want to be part of product development to ensure at the end that the application is “simple, usable and beautiful,” he said.

Avoiding Unanticipated Outcomes

The next phase of work the CLS team will focus on this problem statement: “Continuously learning systems bear the risks of unanticipated outcomes due to a lack of human involvement in the changes, unintended (and undetected) degradation in time, confusion for users, and incompatibility of results with other software that may use the output of the evolving algorithm.”

The goal is “to increase the confidence in output derived through continuously learning artificial intelligence processes such that humans can confidently make critical decisions from these outcomes. A key emphasis will be on the ability to explain how and why the underlying artificial intelligence algorithms evolved to make better decisions or predict different outcomes.”

Baird noted that “convincing ourselves as designers and the FDA will be different than convincing a surgeon, though there may be some overlap.” He also provided examples showing the dangers of having “too much trust.”

The first example was a hospital study in which caregivers were shown fake lab results for fake patients on a tablet. There was a “general tendency” for caregivers over the age of 35 to be more likely to challenge the results and order another lab. Caregivers under the age of 35 were more likely to believe the lab results and proceed with the next steps. “There were questions about how much they were trusting it because it was on their tablet,” Baird said.

In a second example, during California wildfires that took place earlier this year, the Los Angeles Police Department sent out a notice telling people to stop following their navigation apps. The apps indicated that there was no traffic in certain areas where the fire had spread, inadvertently directing people into danger. “This was probably something the people who developed the apps did not take into account in their initial software development,” Baird commented.

These examples, he said, illustrate the “interesting tension” between wanting to get user trust and for them to believe it to improve patient outcomes, “but not having too much trust.”

The dialogue on the use of AI in what has been termed “the fourth industrial revolution” — a fusion of technologies that is blurring the lines between the physical, digital, and biological spheres — will continue at the FDA/Xavier PharmaLink Conference in March 2019. Participants will be exposed to some of the latest technological tools and shown how the tools are being used to rewrite how pharma manufacturing operations, quality systems, and supply chains are being designed and executed.

About The Author:

Jerry Chapman is a GMP consultant with nearly 40 years of experience in the pharmaceutical industry. His experience includes numerous positions in development, manufacturing, and quality at the plant, site, and corporate levels. He designed and implemented a comprehensive “GMP Intelligence” process at Eli Lilly and again as a consultant at a top-five animal health firm. Chapman served as senior editor at International Pharmaceutical Quality (IPQ) for six years and now consults on GMP intelligence and quality knowledge management, and does freelance writing. You can contact him via email, visit his website, or connect with him on LinkedIn.

Jerry Chapman is a GMP consultant with nearly 40 years of experience in the pharmaceutical industry. His experience includes numerous positions in development, manufacturing, and quality at the plant, site, and corporate levels. He designed and implemented a comprehensive “GMP Intelligence” process at Eli Lilly and again as a consultant at a top-five animal health firm. Chapman served as senior editor at International Pharmaceutical Quality (IPQ) for six years and now consults on GMP intelligence and quality knowledge management, and does freelance writing. You can contact him via email, visit his website, or connect with him on LinkedIn.